Closely connected to both augmented reality (AR) and virtual reality (VR), spatial computing is the technology we use to blend our physical and digital experiences, allowing physical and digital objects to coexist in real time. This is a concept we’ve termed “converged experiences”.

We touched on the importance of spatial design in our recent whitepaper, Towards Industry 5.0: How Converged Experiences Empower the Smart, Connected Worker. Essentially, spatial design describes the methods and techniques used to design converged experiences through sophisticated user experience (UX) design, user interface (UI) design and more, while spatial computing is the technology that makes converged experiences possible. To expand on this topic, two of CC’s UX designers, Alice McCutcheon and Matt Rose, discuss using spatial design for converged experiences, exploring the use cases, the challenges and the impact this technology will have in the near future.

How do you envision the integration of converged experience technologies transforming everyday life in the next five years?

Alice McCutcheon: Screens as we know them today are portals to the digital world, limited by flat displays. Converged experiences created through spatial design open up a new world filled with interactable canvases that are not limited to the size of our screen. Assuming the adoption of these technologies continues to increase as hardware constraints are removed, it’s easy to imagine a personalised and responsive experience that adapts to users’ tasks throughout the day.

Whether someone is working in a virtual office with a personalised workspace, collaborating on tasks with their colleagues or watching a fully immersive football game from home, they will be able to see all the stats they care about most for a truly enhanced experience.

Matt Rose: I agree – in fact, we’re already seeing how early adoption of converged experiences is changing the way people work, interact and learn. For example, in healthcare, surgeons are seeing the benefits of using AR in precise surgeries, while patients are benefitting from personalised, immersive rehabilitation programmes.

In education, children are interacting with 3D educational content that makes complex subjects more accessible and engaging. All of this is still in its early days, and as the technology progresses and becomes more affordable, converged experiences will only become more widespread and more useful.

Looking ahead, what emerging technologies do you believe will have the greatest impact on the future of converged experiences?

Alice McCutcheon: The development of artificial intelligence (AI) and machine learning (ML) will be key for creating more responsive experiences. AI and ML can enhance the real-time understanding of the user’s environment and their interactions, as well as predict their intentions and behaviours. This could contribute to hyper-personalised experiences, with machine learning models predicting user preferences, needs and actions to create more engaging and streamlined experiences.

Similarly, haptic feedback technology could add a tactile dimension to virtual interactions, making them feel even more realistic. For example, in a surgery use case, adding haptics could enable surgeons to feel the texture, resistance or subtle movements of tissues or organs as they perform remote surgical procedures or medical training.

Matt Rose: Some obvious ones for me would be the new generation of XR devices that are currently being developed. These are lighter, more powerful and offer a better user experience than existing devices, and game engines like Unity can provide the infrastructure everything runs on.

Then there are some really interesting things happening in 3D asset capture, like NeRFs (Neural Radiance Fields), which enable the creation of high-fidelity 3D models from fairly limited image information. Beyond this, I believe edge computing and low-latency networks will be essential for real-time applications like surgery. And my favourite by far is human-machine understanding (HMU), which integrates emotional state sensors, allowing the system to adapt in real time to the user. All these emerging technologies will be game-changing when it comes to creating the best user experience.

What are the most exciting possibilities for converged experiences in the fields of construction and manufacturing?

Alice McCutcheon: For one, we could make the ‘sci-fi movie’ dream a reality – that is, making an AR experience so streamlined and intuitive that it is perfectly integrated into our lives. In construction and manufacturing specifically, real-time AR integrations could make converged experiences a natural extension of existing workflows, such as workers overlaying blueprints and 3D models onto physical spaces, creating records of work done and following guidance on assembling structures. Workers could also get real-time support from remote experts, who would view the worker’s perspective to offer contextual advice.

Matt Rose: The main thing with spatial design, and its importance for creating converged experiences, is being able to overlay digital information onto a physical environment. As Alice said, this is every sci-fi movie made true. So, because this information is “in context”, it becomes more intuitive, reducing cognitive load and enhancing decision-making and efficiency — all positives for the industrial workplace.

And because the information comes from real-time sensor data and digital twins, it makes things such as maintenance and troubleshooting much easier. Sharing views and digital assets in real time would also enable collaboration between multiple users, whether they are in the same location or spread across the world.

What are the biggest spatial computing and spatial design challenges when creating immersive environments for converged experiences?

Alice McCutcheon: I think one of the big challenges designers face is creating interfaces that seamlessly integrate with real-world environments. That means creating interfaces that have realistic shadows or lighting based on the environment, or reflections of the user to create a total feeling of immersion.

Occlusion in AR can help with this challenge, creating a sense of digital assets responding to the physical environment. That said, creating context-aware content that keeps the user focused without overwhelming them is still a tricky balance to strike. Equally, creating consistent experiences across different devices and platforms can be complex.

Headsets with varying capabilities require scalable design systems, which we designers can shape. Finally, as XR integrates increasingly with IoT, designing secure and privacy-conscious interfaces is essential, creating trust with the user that their data won’t be compromised, especially as headsets capture camera feeds. It’s no easy task, but one we feel is worth the effort given the benefits it can offer.
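As a rough illustration of the occlusion idea mentioned above, a renderer can hide the parts of a digital asset that sit behind real-world surfaces by comparing depths pixel by pixel. The sketch below assumes the headset supplies a per-pixel depth estimate of the real scene; the function name `occlusion_mask` and the sample depth values are hypothetical, and production AR frameworks typically do this on the GPU rather than in Python.

```python
def occlusion_mask(real_depth, virtual_depth):
    """Return a 2D grid of booleans: True where the virtual pixel is visible.

    real_depth:    2D list of distances (metres) to the nearest real surface.
    virtual_depth: 2D list of distances to the virtual asset, or None where
                   the asset does not cover that pixel.
    """
    mask = []
    for real_row, virtual_row in zip(real_depth, virtual_depth):
        mask.append([
            # Draw the virtual pixel only if it exists and is closer
            # to the viewer than the real surface behind it.
            v is not None and v < r
            for r, v in zip(real_row, virtual_row)
        ])
    return mask


# Hypothetical scene: a wall 2 m away, with a pillar 1 m away in the right column.
real = [[2.0, 2.0, 1.0],
        [2.0, 2.0, 1.0]]
# A virtual panel 1.5 m away covering the middle and right columns.
virtual = [[None, 1.5, 1.5],
           [None, 1.5, 1.5]]

visible = occlusion_mask(real, virtual)
```

In this made-up scene the panel shows in front of the wall but is hidden where the nearer pillar blocks it, which is the "responding to the physical environment" effect described above.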

Matt Rose: The biggest challenge for a designer has to be thinking in three dimensions. It’s definitely a shift in mindset when you’re used to designing for two-dimensional screens.

As Alice touched on, AR also brings a new set of design expectations with it: how will something look when it’s further away? How will it sound or behave when interacted with? It can be more complex than designing an app, but also much more fun.
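One way to reason about the "how will something look when it’s further away?" question is through visual angle: an object of fixed physical size takes up less of the user’s view as distance grows. The sketch below uses basic trigonometry to estimate how tall a panel would need to be to keep the same apparent size at different distances. The function name and the 10-degree target are illustrative assumptions, not values from any particular platform guideline.

```python
import math


def height_for_visual_angle(distance_m, angle_deg):
    """Height (metres) an object needs in order to span `angle_deg`
    of the viewer's vertical field of view at `distance_m` metres."""
    return 2.0 * distance_m * math.tan(math.radians(angle_deg) / 2.0)


# Assumed target: a panel that should always span about 10 degrees of view.
for distance in (0.5, 1.0, 2.0, 4.0):
    height = height_for_visual_angle(distance, 10.0)
    print(f"{distance:.1f} m away -> panel about {height:.2f} m tall")
```

The required height grows in direct proportion to distance, so a designer has to choose between scaling content up as it moves away or accepting that it will appear smaller.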

How do you approach the challenge of designing intuitive spatial interfaces, particularly for users who may be unfamiliar with converged experiences?

Alice McCutcheon: Looking at what currently exists is a good starting point. Although applications are limited right now, testing what is already available lets us see first-hand not only what works well, but also what doesn’t. Is there an app that makes you feel particularly motion sick? Or is there a game where it’s really hard to understand how to interact with things? Noting what might be causing a poor or great experience is useful to keep in mind when designing your own.

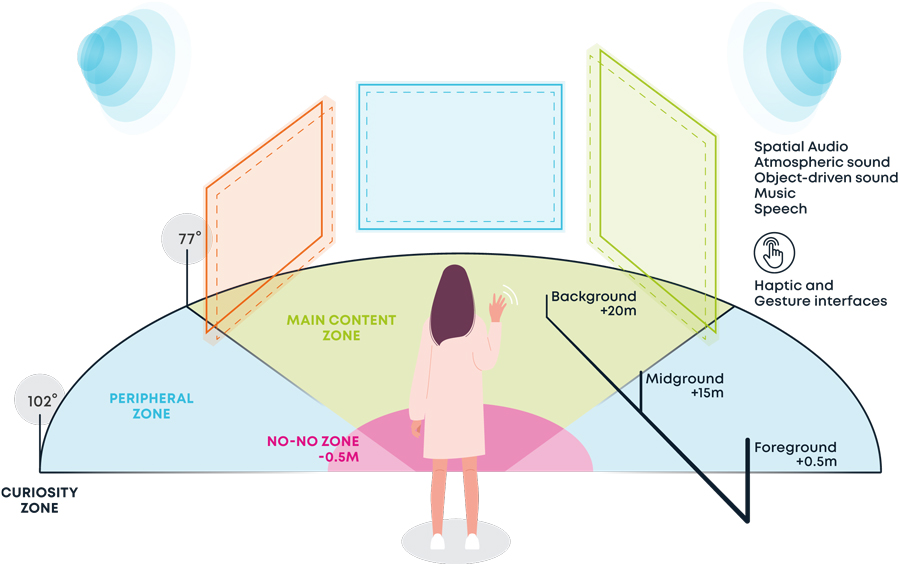

Technology found in gaming can be particularly useful, as many of the skills and techniques that already exist in the gaming world can be transferred into new fields, such as industrial and consumer applications. Thinking of the XR world as a fully immersive 360-degree experience can also be insightful: depth of field, where spatial audio might support an experience and how to deal with parallax are all important to understand for the design process. Then, starting with low-fidelity designs and using apps such as Gravity Sketch can be a good way to get ideas into 3D.

Matt Rose: There are a few key things to consider when designing for users unfamiliar with spatial interfaces. But in essence, clarity and simplicity are always a good place to start. Providing clear visual indicators and affordances will help guide users on where and how to interact with spatial elements. Starting with basic functionality, information and interactions, and then progressively introducing more complex features, will help users learn quickly without getting overwhelmed.

The future of converged experiences

From remote surgery to industrial and manufacturing applications, converged experiences enabled by spatial computing and spatial design will change the way we interact with the world around us. Explore the topic further in our latest whitepaper, and if you’re interested in discussing converged experiences and spatial design further, reach out to continue the conversation.